Lesson 3 - Getting Started With VR Development

Prepare For VR Development

1. Create a new folder on the Desktop and name it, for example, “Lab3”.

2. Create a new Unity project inside the “Lab3” folder.

3. In this lab, we introduce how to develop VR software and games with Unity3D.

4. Since you already know how to use Unity3D and write scripts, some steps are not shown in detail. The content here all comes from this website. You can find more about the API on the official Unity3D website.

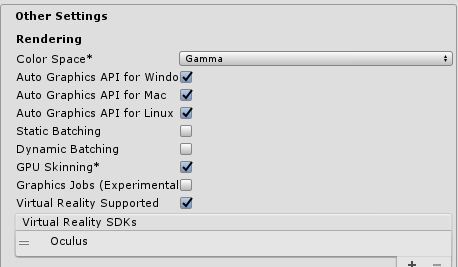

5. To start using VR, you need to enable VR support. Click Edit > Project Settings > Player > XR Settings and enable Virtual Reality Supported.

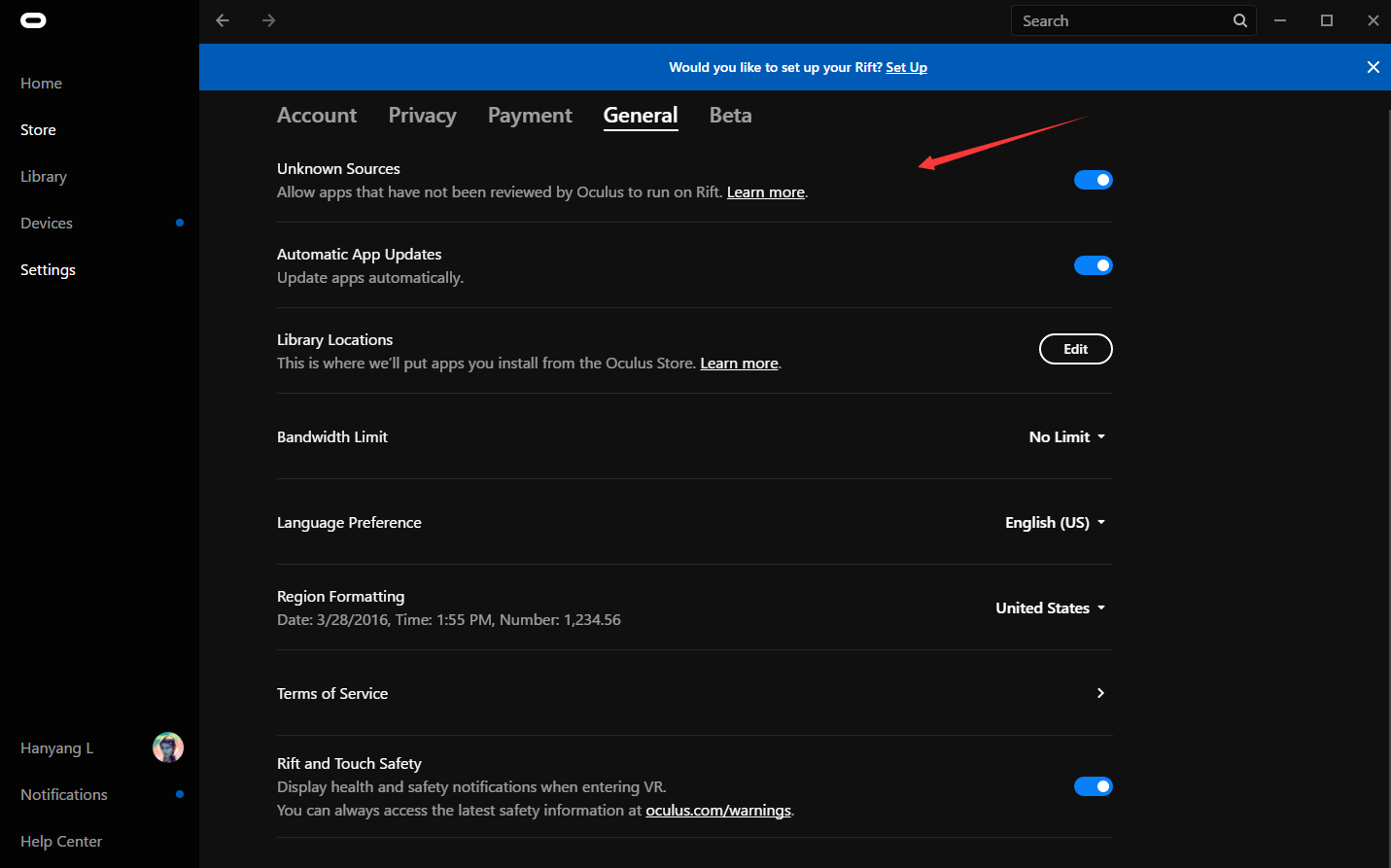

6. You also need to download the Oculus Setup software and connect your Oculus to your computer before starting this lab. In addition, go to Oculus > Settings > General > Unknown Sources and enable Unknown Sources; otherwise you cannot preview your project with the Oculus DK2.

7. Before you start this project, download the VRBox unitypackage and import it into your project. We will use some of its assets to build your first VR project. The package includes some scripts prepared by Unity3D itself.

Interactions In VR

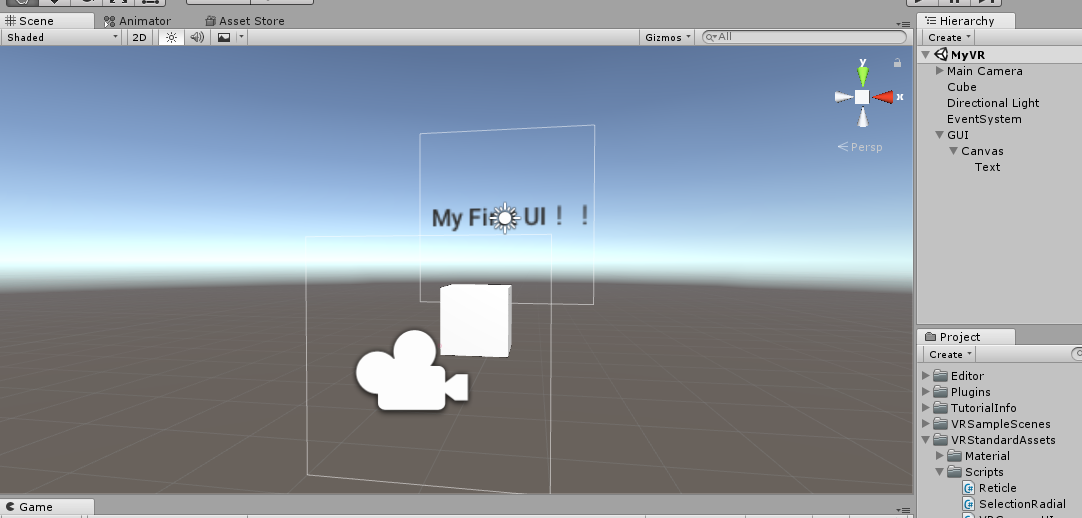

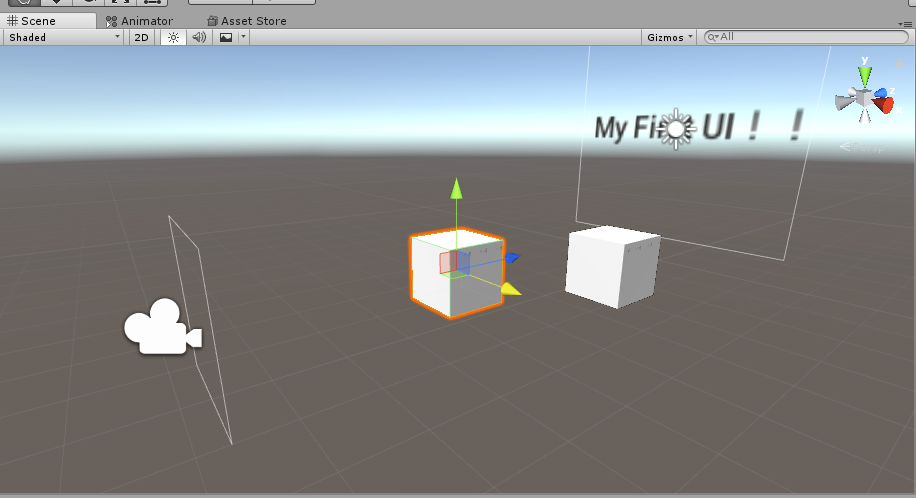

8. Now we introduce interaction in the VR world. Create a new scene named “MyVR”.

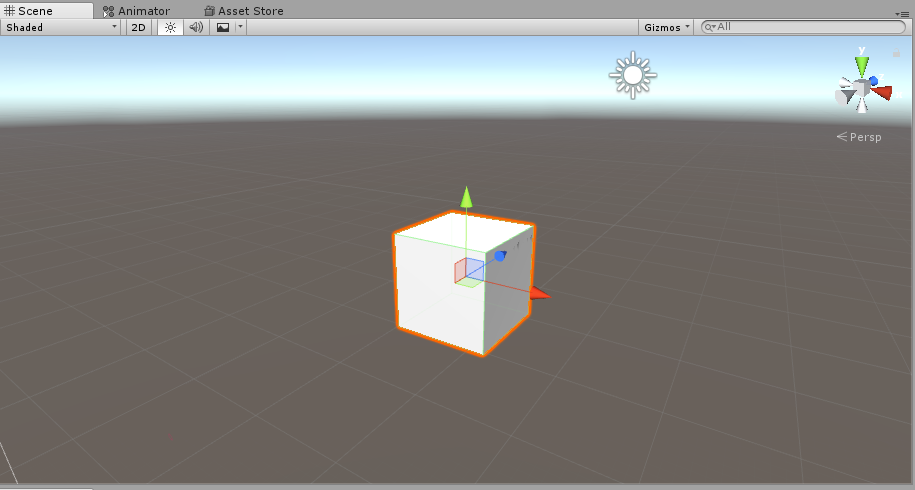

9. First, create a cube in your scene.

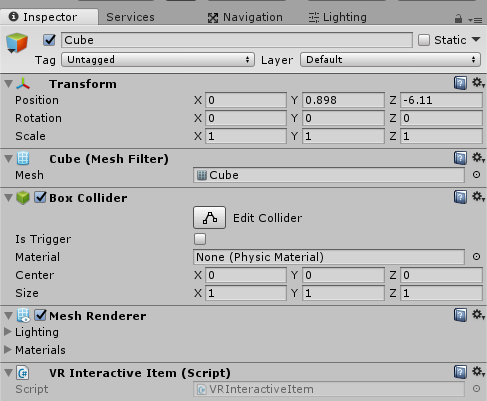

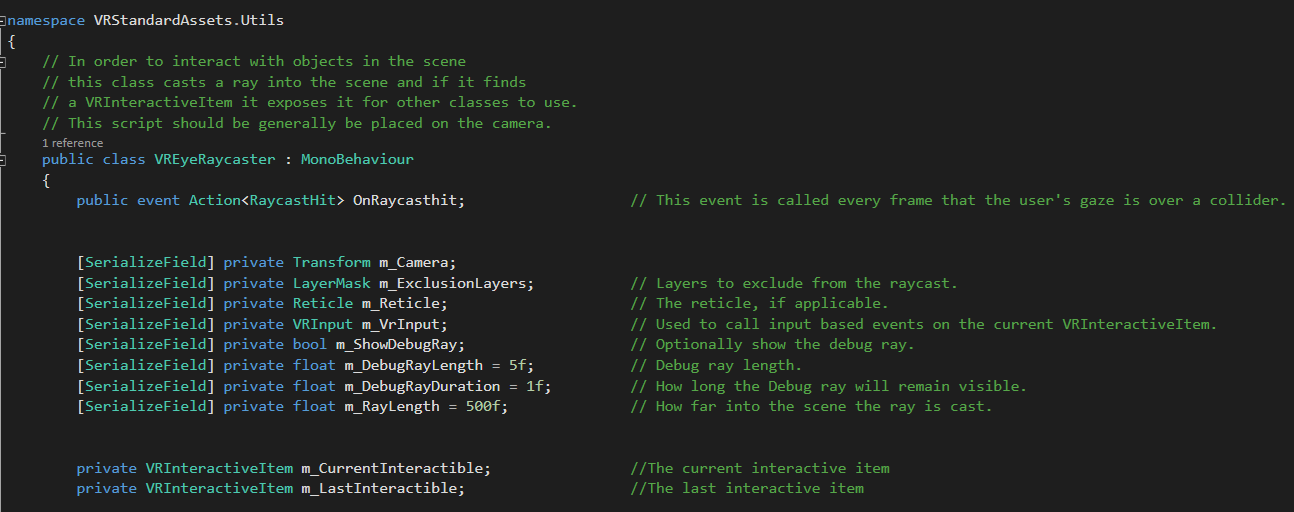

10. Drag the script “VRInteractiveItem” onto this cube. Every item you want the user to interact with needs this script attached; “VREyeRaycaster” uses it to detect the item.

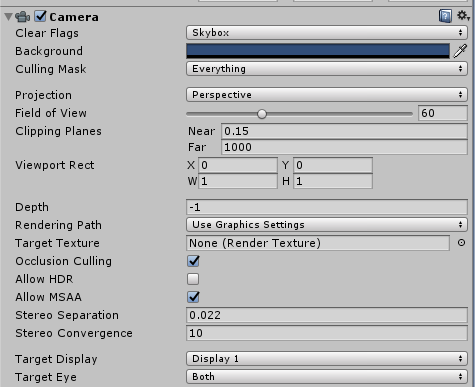

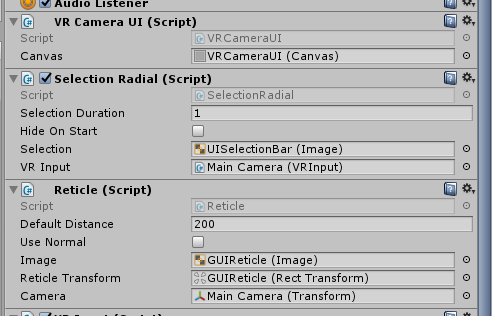

11. Next, set up the main camera. It is best to configure it like this.

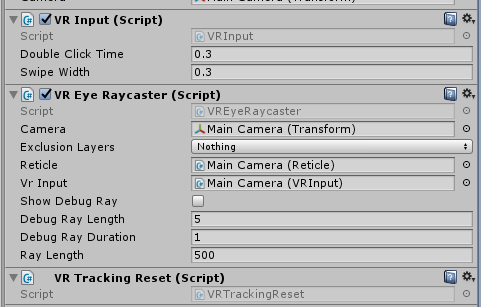

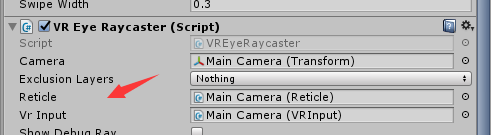

12. Then, drag the scripts “VRInput”, “VREyeRaycaster” and “VRTrackingReset” onto your main camera.

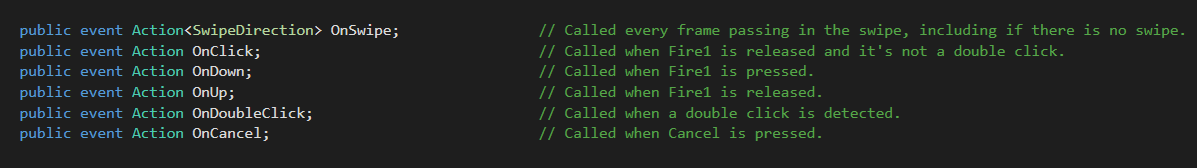

- VR Input captures what the user inputs into the game: the movement of the headset, or input from the keyboard, mouse or game controller.

- VR Eye Raycaster casts a ray starting from your eye. It determines which direction you are looking in and is used to interact with other objects.
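To illustrate the idea behind the eye raycaster, here is a minimal sketch of a gaze ray cast from the camera. It is not the VREyeRaycaster script from the package; the class name `SimpleGazeRaycaster` and the `maxDistance` value are assumptions made for this example, and it only logs when the gaze enters or leaves an object that has a collider.

```csharp
using UnityEngine;

// Hypothetical, simplified stand-in for the package's VREyeRaycaster.
// Attach to the main camera; objects need a Collider to be detected.
public class SimpleGazeRaycaster : MonoBehaviour
{
    [SerializeField] private float maxDistance = 500f; // assumed ray length

    private GameObject currentTarget; // object the user is currently looking at

    private void Update()
    {
        // The ray starts at the camera and points where the user is looking.
        Ray gaze = new Ray(transform.position, transform.forward);

        GameObject hitObject = null;
        RaycastHit hit;
        if (Physics.Raycast(gaze, out hit, maxDistance))
        {
            hitObject = hit.collider.gameObject;
        }

        // Report only when the looked-at object changes.
        if (hitObject != currentTarget)
        {
            if (currentTarget != null) Debug.Log("Gaze left " + currentTarget.name);
            if (hitObject != null) Debug.Log("Gaze entered " + hitObject.name);
            currentTarget = hitObject;
        }
    }
}
```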

13. Next, we build the reticle for our camera, so that the user can use it to interact with objects.

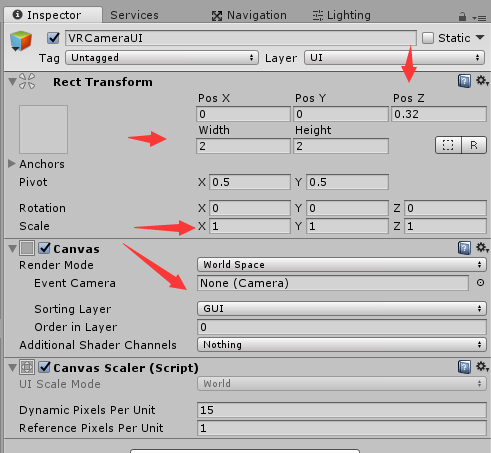

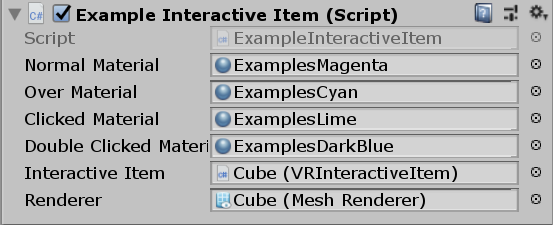

- First, create a canvas under the main camera and rename it “VRCameraUI”. Set it like this and delete the “Graphic Raycaster” component. Note that before you can edit the Rect Transform values, the Render Mode of the Canvas has to be changed to World Space.

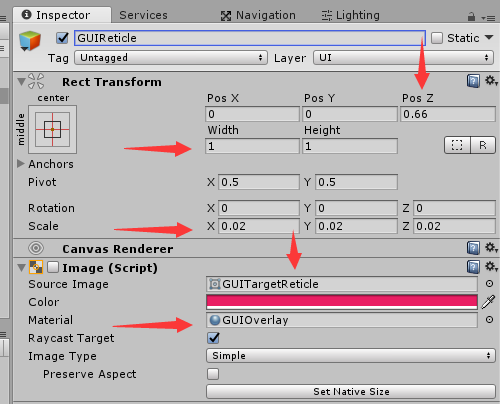

- Then create an Image under VRCameraUI and rename it “GUIReticle”. Set it like this.

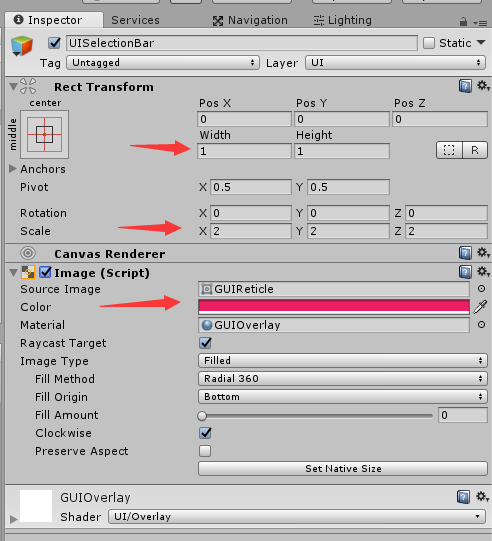

- Then you need to create an Image under GUIReticle named as “UISelectionBar”. You need to set it like this.

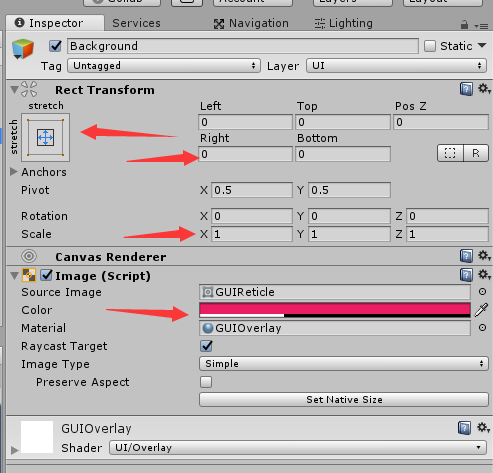

- What’s more, you need to create an Image under UISelectionBar named as “Background”. You need to set it like this.

The z value places the UI slightly in front of your camera, so that the camera can see it.

It should be scaled as (0.02, 0.02, 0.02). It does not need to be large because it is near our camera.

14. With the basic reticle objects in place, we need to add scripts for them. Drag “VRCameraUI”, “SelectionReticle” and “Reticle” onto your main camera and set them like this. (Selection Radial is not used here.)

- Remember to drag Reticle to VREyeRaycaster.

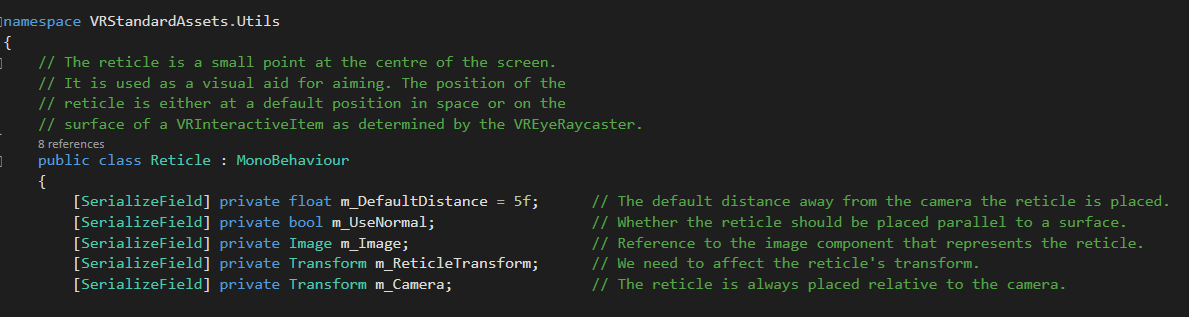

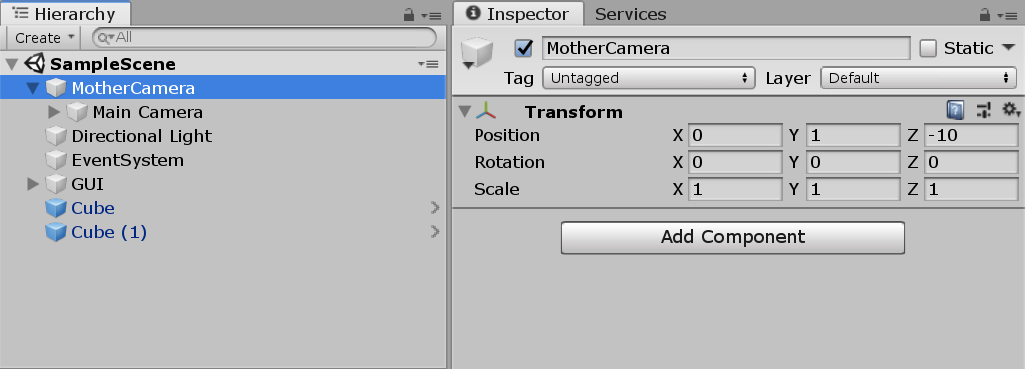

- Reticle is used to set the position of your reticle.

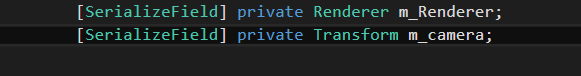

15. Then we need to write some scripts for the cube to respond to interactions from the user.

Note: Add "using UnityEngine.UI;" and "using VRStandardAssets.Utils;" at the start of the code, since we are referencing classes in those namespaces.

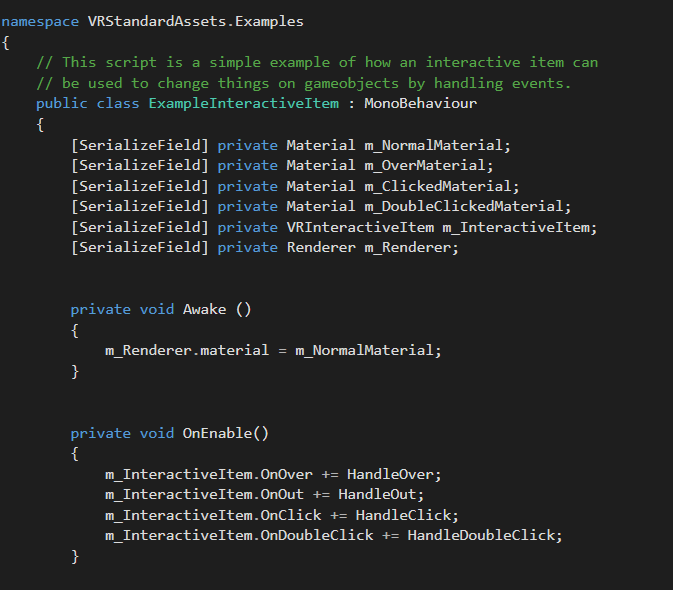

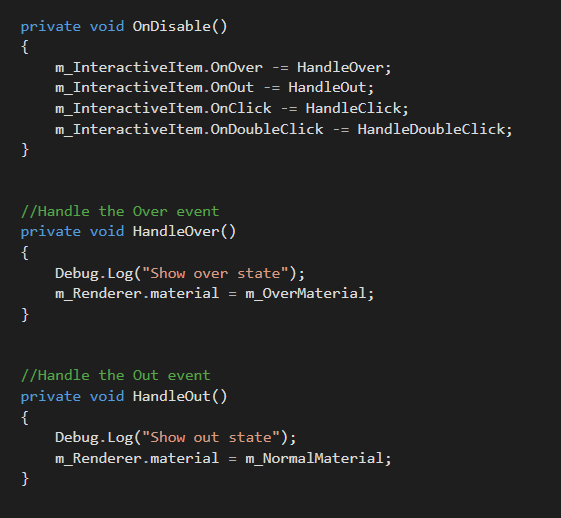

This script makes the cube respond when the reticle moves onto or off it, and when the user clicks or double-clicks with the mouse.
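As a rough sketch of what such a script can look like: the version below swaps the cube's material on each event. It assumes that `VRInteractiveItem` exposes the events `OnOver`, `OnOut`, `OnClick` and `OnDoubleClick`, as it does in Unity's VR Samples; the field names are illustrative, and the script shown in the screenshots may differ in detail.

```csharp
using UnityEngine;
using VRStandardAssets.Utils;

// Sketch of an interactive item that changes material in response to the reticle.
// Assumes VRInteractiveItem provides OnOver/OnOut/OnClick/OnDoubleClick events.
public class ExampleInteractiveItem : MonoBehaviour
{
    [SerializeField] private Material m_NormalMaterial;
    [SerializeField] private Material m_OverMaterial;
    [SerializeField] private Material m_ClickedMaterial;
    [SerializeField] private Material m_DoubleClickedMaterial;
    [SerializeField] private VRInteractiveItem m_InteractiveItem;
    [SerializeField] private Renderer m_Renderer;

    private void Awake()
    {
        m_Renderer.material = m_NormalMaterial;
    }

    private void OnEnable()
    {
        // Subscribe to the events raised when the reticle interacts with this item.
        m_InteractiveItem.OnOver += HandleOver;
        m_InteractiveItem.OnOut += HandleOut;
        m_InteractiveItem.OnClick += HandleClick;
        m_InteractiveItem.OnDoubleClick += HandleDoubleClick;
    }

    private void OnDisable()
    {
        // Always unsubscribe, so a disabled object no longer receives callbacks.
        m_InteractiveItem.OnOver -= HandleOver;
        m_InteractiveItem.OnOut -= HandleOut;
        m_InteractiveItem.OnClick -= HandleClick;
        m_InteractiveItem.OnDoubleClick -= HandleDoubleClick;
    }

    private void HandleOver() { m_Renderer.material = m_OverMaterial; }

    private void HandleOut() { m_Renderer.material = m_NormalMaterial; }

    private void HandleClick() { m_Renderer.material = m_ClickedMaterial; }

    private void HandleDoubleClick() { m_Renderer.material = m_DoubleClickedMaterial; }
}
```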

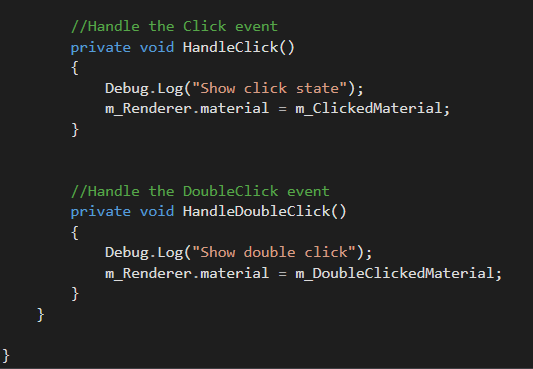

16. Add the "ExampleInteractiveItem" script to the cube, then set the references like this (the Materials can be in any order you like). Now you can click Play and test the interaction between your reticle and your cube.

If you like, you can set the cube as a prefab.

UI In VR

17. Now, let’s set up some more UI for your VR game world.

18. UI in VR is a little different from ordinary UI. Ordinary UI is laid out on a 2D plane, which players see on their screen. In VR, however, we view the world through a headset, so the UI objects have to be placed in the 3D world for users to see them clearly.

19. Creating UI for VR is similar to what we did when creating the reticle.

First create a canvas in your scene. It is best to give it a position with small values and a suitable width and height.

Remember to set the Render Mode to “World Space”!

20. Then you can add UI components to this canvas. For example, you can add some text.
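The same setup can also be done from a script. The sketch below is only an illustration: the class name `WorldSpaceLabel`, the size, scale and position values, and the label text are all assumptions, and both fields must be assigned in the Inspector.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Sketch: configure a canvas for VR by switching it to World Space,
// giving it a small scale, and writing some text into it.
public class WorldSpaceLabel : MonoBehaviour
{
    [SerializeField] private Canvas m_Canvas; // canvas to place in the 3D world
    [SerializeField] private Text m_Text;     // a Text element under that canvas

    private void Start()
    {
        // World Space makes the canvas an ordinary object in the scene.
        m_Canvas.renderMode = RenderMode.WorldSpace;

        RectTransform rect = m_Canvas.GetComponent<RectTransform>();
        rect.sizeDelta = new Vector2(400f, 200f);  // width and height (assumed values)
        rect.localScale = Vector3.one * 0.005f;    // shrink so it is about 2 m x 1 m
        rect.position = new Vector3(0f, 1.5f, 2f); // in front of the player (assumed)

        m_Text.text = "Hello VR";
    }
}
```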

21. You can also build UI elements as interactive items, so that they respond to your reticle. In addition, you can add UI that follows your camera, like the reticle.

Movement In VR

22. Next, let us see how to handle movement in the VR world.

23. Moving the camera directly to move the player tends to make players uncomfortable in VR. Therefore, we need other methods to move the player.

24. Here, we use a portal to move the player in the VR world.

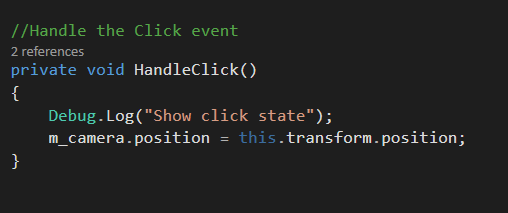

25. The portal chooses a new position for the camera and sets the camera to the new position. We can achieve this by simply changing the script of the cube.

- We need to add a new variable for the script

- Then we can simply change the HandleClick function.

26. Now create an empty object and place the main camera as a child of that object. Reset the transform of the main camera and set the position of the parent object to (0, 1, -10).

We will be moving this parent object for teleports, so set the camera reference for the Example Interactive Item script to this parent object.
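One possible shape for the portal logic is sketched below as a separate script rather than as a change to ExampleInteractiveItem. It assumes `VRInteractiveItem` exposes an `OnClick` event (as in Unity's VR Samples); the class name `TeleportOnClick` and the field `m_PlayerRig` are illustrative. Keeping the rig's current height instead of copying the cube's y position is a design choice of this sketch, not something the lab prescribes.

```csharp
using UnityEngine;
using VRStandardAssets.Utils;

// Sketch: clicking the item moves the player rig (the camera's parent) to the item.
public class TeleportOnClick : MonoBehaviour
{
    [SerializeField] private VRInteractiveItem m_InteractiveItem;
    [SerializeField] private Transform m_PlayerRig; // parent object of the main camera

    private void OnEnable()
    {
        m_InteractiveItem.OnClick += HandleClick;
    }

    private void OnDisable()
    {
        m_InteractiveItem.OnClick -= HandleClick;
    }

    private void HandleClick()
    {
        // Move the rig to this object's position, but keep the rig's own height
        // so the player does not end up inside the floor or the cube.
        Vector3 target = transform.position;
        target.y = m_PlayerRig.position.y;
        m_PlayerRig.position = target;
    }
}
```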

27. After updating this function, you can test your game. When you click on the cube, you will move to the position of this cube. To confirm that your position has changed, you can add one more cube as a reference.

28. You now know the basics of interaction, UI and movement in VR development. You can also add a fade-out and fade-in when you move in VR: adding an image to the VRCameraUI lets you achieve this for a smoother movement effect. Then, you can start on your Assignment 2.

Working with Oculus and Touch Controllers

29. Now, we will look at how to use Oculus Touch controllers with Unity. Go to the Asset Store by clicking Window > Asset Store or pressing Ctrl + 9, and search for "Oculus Integration". Download and import it into your project. Make sure that all files are imported.

30. You will notice that there is now an "Oculus" folder under Assets. It contains a lot of useful scripts and prefabs that you can use with Touch controllers. However, in this tutorial we won't be using these high-level scripts; we will create our own for grabbing and throwing objects.

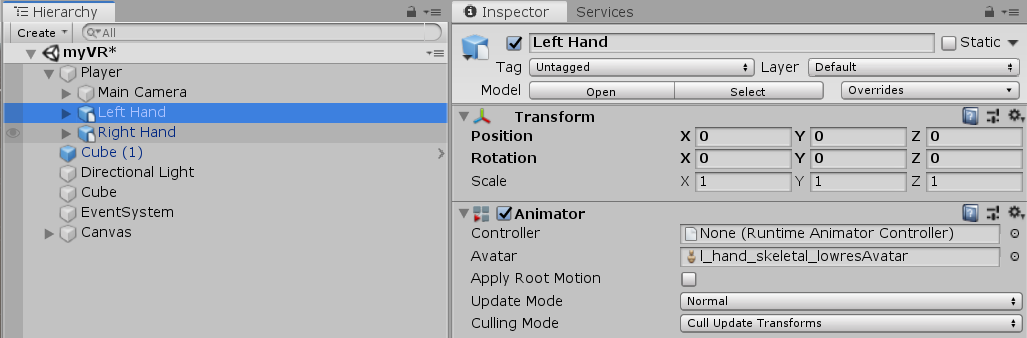

31. Navigate to Oculus > Sample Framework > Core > CustomHands > Models and find l_hand_skeletal_lowres and r_hand_skeletal_lowres. Drag them into the scene and rename them "Left Hand" and "Right Hand" respectively. Place them under the Player object (which is the Camera's parent). Then, set their position as (0, 0, 0).

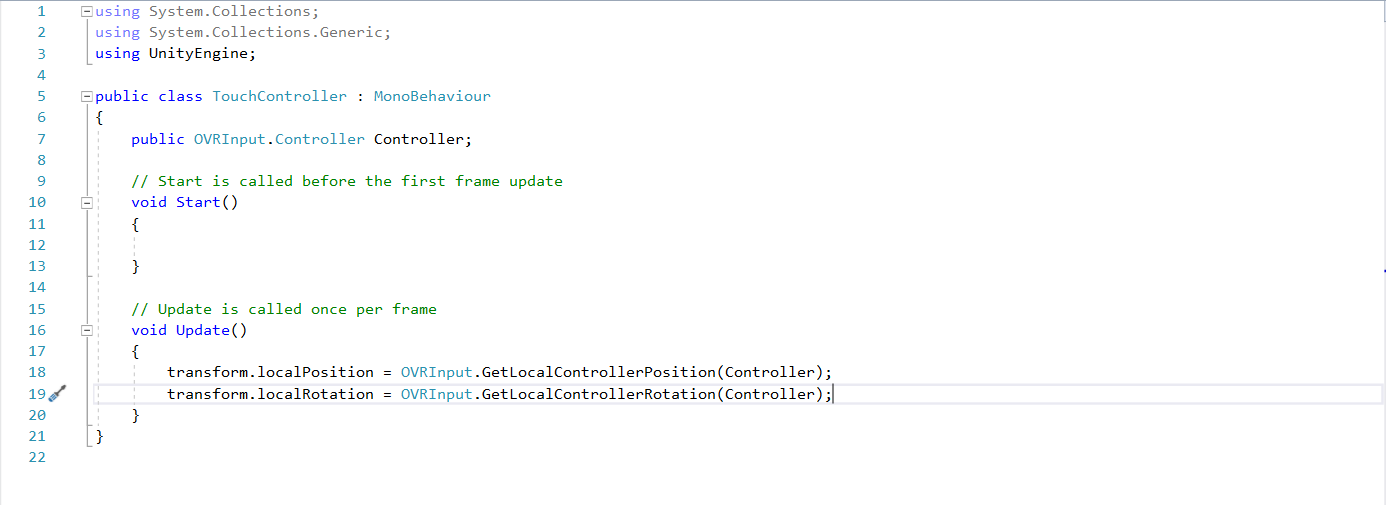

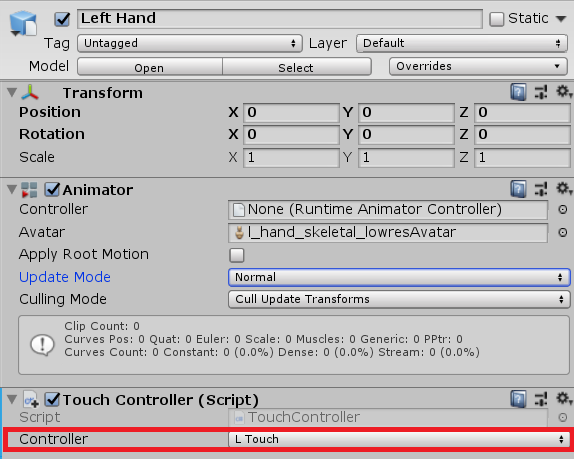

32. Now, we need to map the hands' movements to the controllers' movements. Create a script called "TouchController" as follows:

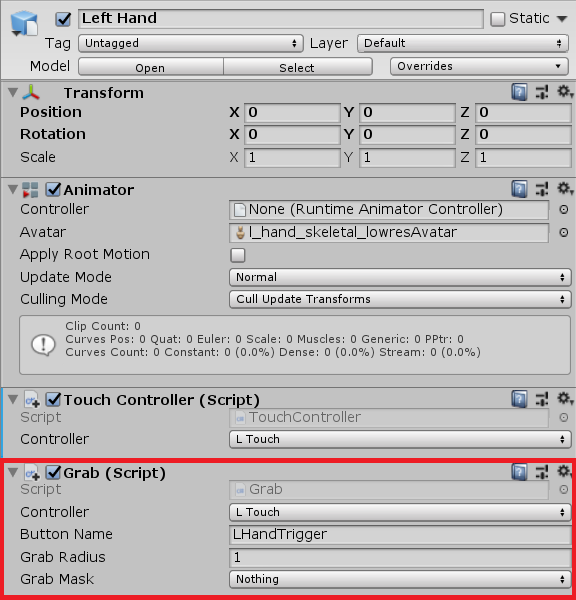

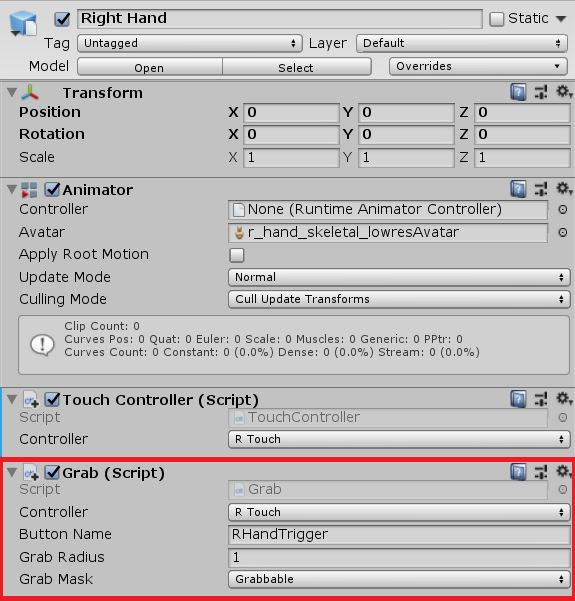

This script is not specific to the left or right controller so you can use it for both controllers. Return to the editor and add this script to both Left Hand and Right Hand. You'll notice that there is a public field where you have to set the correct controller corresponding to each hand. For Left Hand, select "L Touch" and for Right Hand, select "R Touch".
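A minimal sketch of such a script is shown below, using `OVRInput.GetLocalControllerPosition` and `OVRInput.GetLocalControllerRotation` from the Oculus Integration package. It may differ from the version in the screenshots. Oculus's documentation notes that OVRInput is only updated if an OVRManager is present in the scene (or if `OVRInput.Update()` is called explicitly), so treat that as a requirement to check in your own setup.

```csharp
using UnityEngine;

// Sketch: make this object follow a Touch controller.
// Attach to Left Hand and Right Hand and pick the controller in the Inspector.
public class TouchController : MonoBehaviour
{
    // Shown in the Inspector as a dropdown; choose L Touch or R Touch.
    public OVRInput.Controller controller;

    private void Update()
    {
        // Controller pose is reported relative to the tracking space,
        // so it is applied as a local pose under the Player object.
        transform.localPosition = OVRInput.GetLocalControllerPosition(controller);
        transform.localRotation = OVRInput.GetLocalControllerRotation(controller);
    }
}
```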

33. Now, go test out the touch controllers. The hands in the VR space should move and rotate according to the controllers.

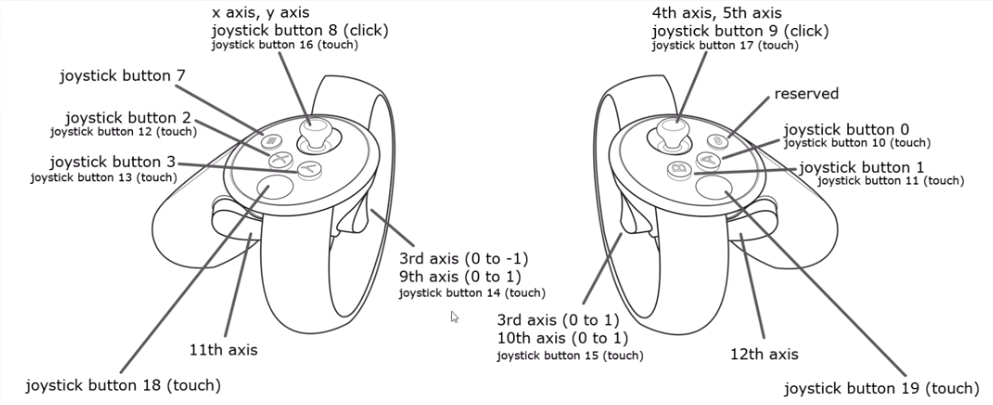

34. Now, we shall move on to the Grab function. First, we need to decide which button on the controller to use to grab objects. Refer to the diagram below to see the names of each button.

You can choose any button, but by convention grabbing objects is mapped to the 11th Axis and the 12th Axis, that is, the left and right hand triggers (not to be confused with the index triggers!).

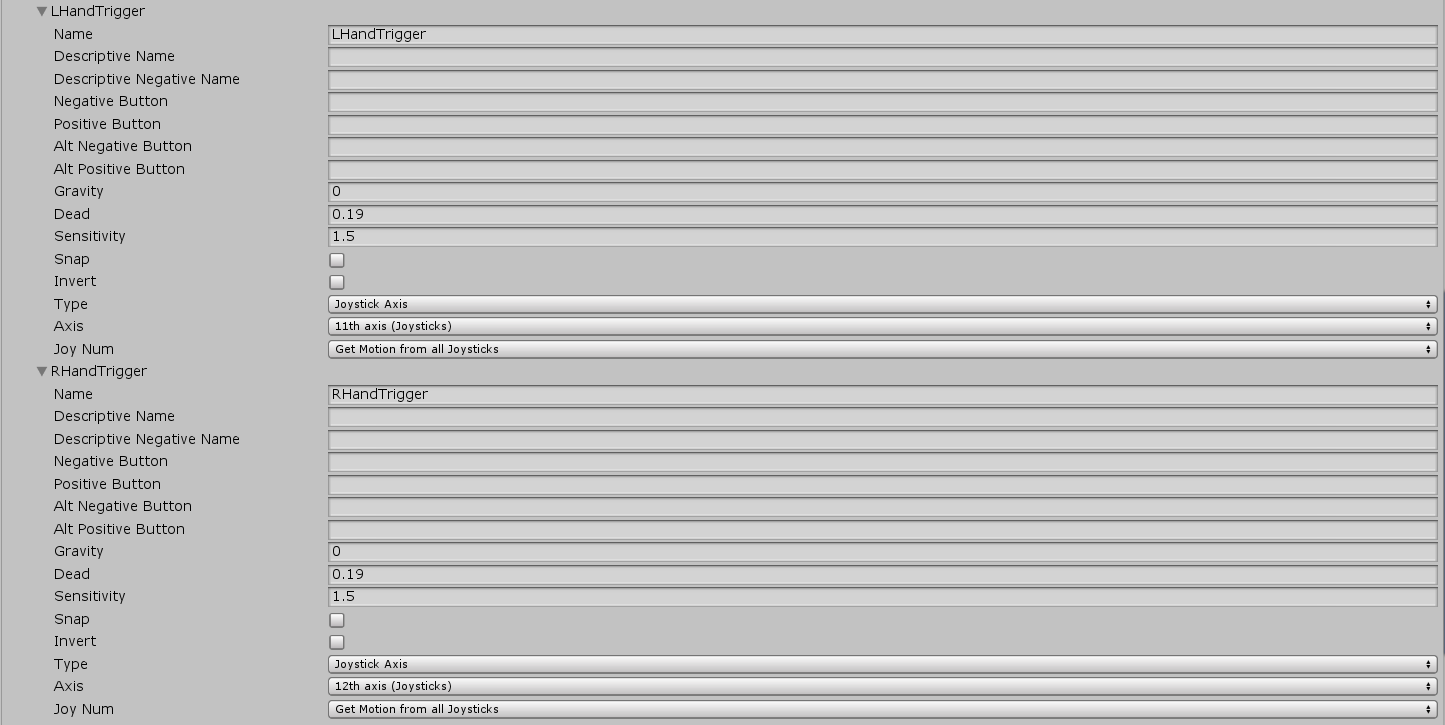

35. Now we need to map the buttons to an input. Go to Edit > Project Settings and click on Input. Create an input and name it "LHandTrigger". Set Gravity to 0, Dead to 0.19 and Sensitivity to 1.5. For Axis, choose "11th Axis (Joysticks)". Duplicate LHandTrigger, rename the copy to "RHandTrigger" and set its axis to "12th Axis (Joysticks)".

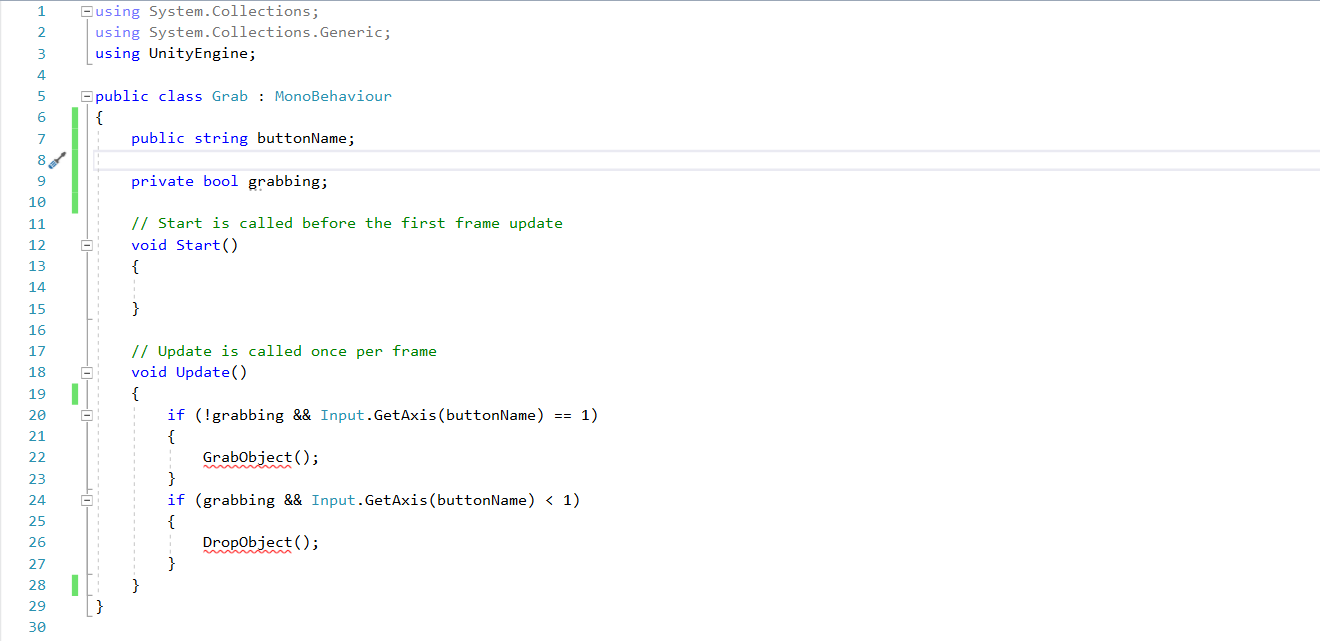

36. Create a script called "Grab". First, we want to keep track of whether the hand is currently grabbing an object, so we have a private boolean for that. In the Update function, we check if the hand trigger is being pressed and call the functions GrabObject() and DropObject(), which we will define later. The script should now look like this:

Note that the string buttonName is there so that the script isn't particular to either the left or right controller, just like for the TouchController script.

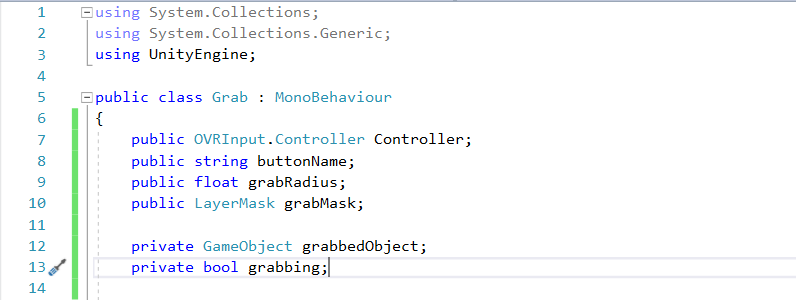

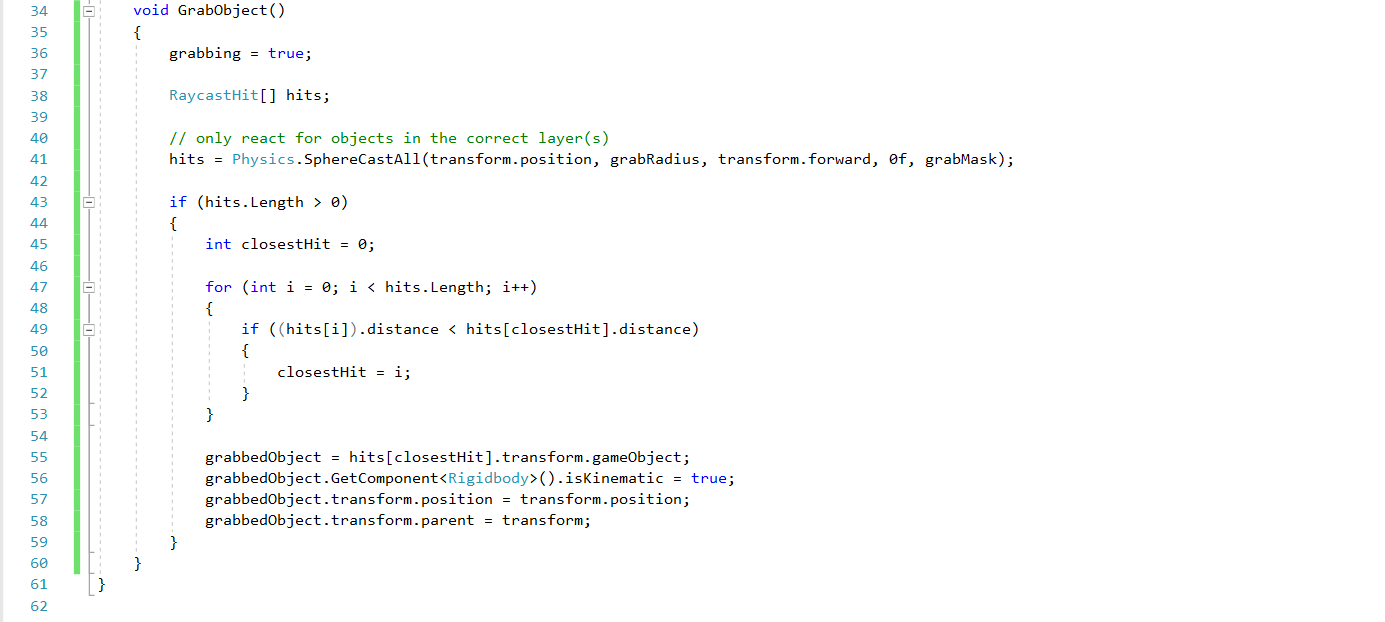

37. Now we move on to the GrabObject() function. It uses a sphere cast to find items within a sphere around the controller, then picks up the nearest one. Add some lines to the top of the script so that it looks like this:

controller provides a reference to the left or right controller, grabRadius is the range of the sphere cast, grabbedObject keeps track of the object being held, and grabMask is a layer mask such that only objects in that layer can be grabbed.

Next, we define the GrabObject() function:

Note that the rigidbody of the grabbed object is set to kinematic so that gravity does not act on it while it is being held, and the grabbed object is made a child of the controller so that the two move together.

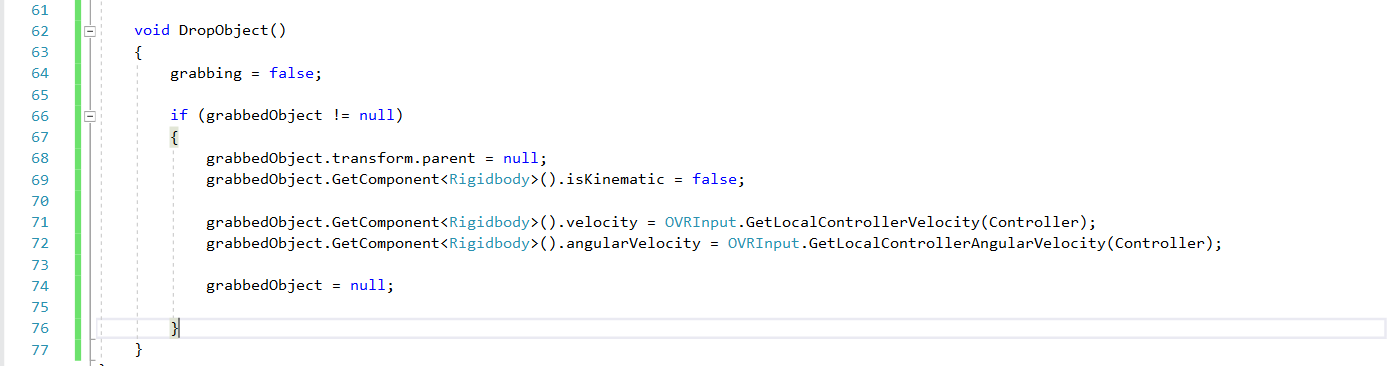

38. In the DropObject() function, we want to reverse everything that is done in the GrabObject() function.
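Putting steps 36 to 38 together, the whole Grab script could look roughly like the sketch below. Two things differ from the description and are assumptions of this sketch: it uses `Physics.OverlapSphere` instead of a sphere cast to collect nearby colliders (and measures distance between object positions), and it treats the trigger as pressed above a threshold of 0.5. The `controller` field is kept only to match the fields described above and is not used here.

```csharp
using UnityEngine;

// Sketch of a grab script for one hand. Requires an Input axis whose name
// matches buttonName (for example "LHandTrigger") and a layer for grabMask.
public class Grab : MonoBehaviour
{
    public OVRInput.Controller controller; // L Touch or R Touch (unused in this sketch)
    public string buttonName;              // name of the Input axis for the hand trigger
    public float grabRadius = 1f;          // how far from the hand objects can be grabbed
    public LayerMask grabMask;             // only objects on these layers can be grabbed

    private const float PressThreshold = 0.5f; // assumed: axis value that counts as pressed

    private GameObject grabbedObject;
    private bool grabbing;

    private void Update()
    {
        bool pressed = Input.GetAxis(buttonName) > PressThreshold;
        if (pressed && !grabbing) GrabObject();
        else if (!pressed && grabbing) DropObject();
    }

    private void GrabObject()
    {
        grabbing = true;

        // Collect every grabbable collider within reach of the hand.
        Collider[] nearby = Physics.OverlapSphere(transform.position, grabRadius, grabMask);

        // Pick the closest one that has a rigidbody.
        Rigidbody closest = null;
        float closestSqr = float.MaxValue;
        foreach (Collider c in nearby)
        {
            Rigidbody body = c.attachedRigidbody;
            if (body == null) continue;
            float sqr = (c.transform.position - transform.position).sqrMagnitude;
            if (sqr < closestSqr)
            {
                closestSqr = sqr;
                closest = body;
            }
        }
        if (closest == null) return;

        grabbedObject = closest.gameObject;
        closest.isKinematic = true;                            // physics no longer moves it
        grabbedObject.transform.position = transform.position; // snap to the hand
        grabbedObject.transform.parent = transform;            // follow the hand
    }

    private void DropObject()
    {
        grabbing = false;
        if (grabbedObject == null) return;

        // Reverse what GrabObject did.
        grabbedObject.transform.parent = null;
        grabbedObject.GetComponent<Rigidbody>().isKinematic = false;
        grabbedObject = null;
    }
}
```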

39. Before we can use the script, we need to add a layer called "Grabbable". All objects that we want to be grabbable have to be in that layer.

40. Next, add the Grab script to both Left Hand and Right Hand. For Left Hand, set the Controller field as "L Touch", the Button Name field as "LHandTrigger", Grab Radius as 1, and Grab Mask as "Grabbable". For Right Hand, set the Controller field as "R Touch", the Button Name field as "RHandTrigger", Grab Radius as 1, and Grab Mask as "Grabbable".

41. Now, you can create any object, add a rigidbody, set the layer to "Grabbable", and test it in VR! Remember to create a ground; otherwise the objects will just keep falling.

Note: If the Touch controller input is not registering (due to a bug), press the Oculus home button twice. More details of the fix are at: https://forum.unity.com/threads/oculus-touch-input-not-detected.546942/

Working with HTC Vive

42. You can also use the HTC Vive instead of the Oculus Rift. One advantage is that you can use trackers to track, for example, the movement of your feet.

43. First, download the Introduction to HTC Vive - Starter from LumiNUS and open that Unity project. Then, go to the Asset Store, search for "SteamVR" and download the SteamVR Plugin. Import it into the project.

44. We will use the camera provided by the SteamVR plugin, so delete the Main Camera in the scene. Then, go to the folder SteamVR > Prefabs and find the [CameraRig] and [SteamVR] prefabs. Drag them into the scene. Set the position of the [CameraRig] to (0, 0, -1.1). Now you can put on the HTC Vive headset, and you should see the controllers in the virtual space.

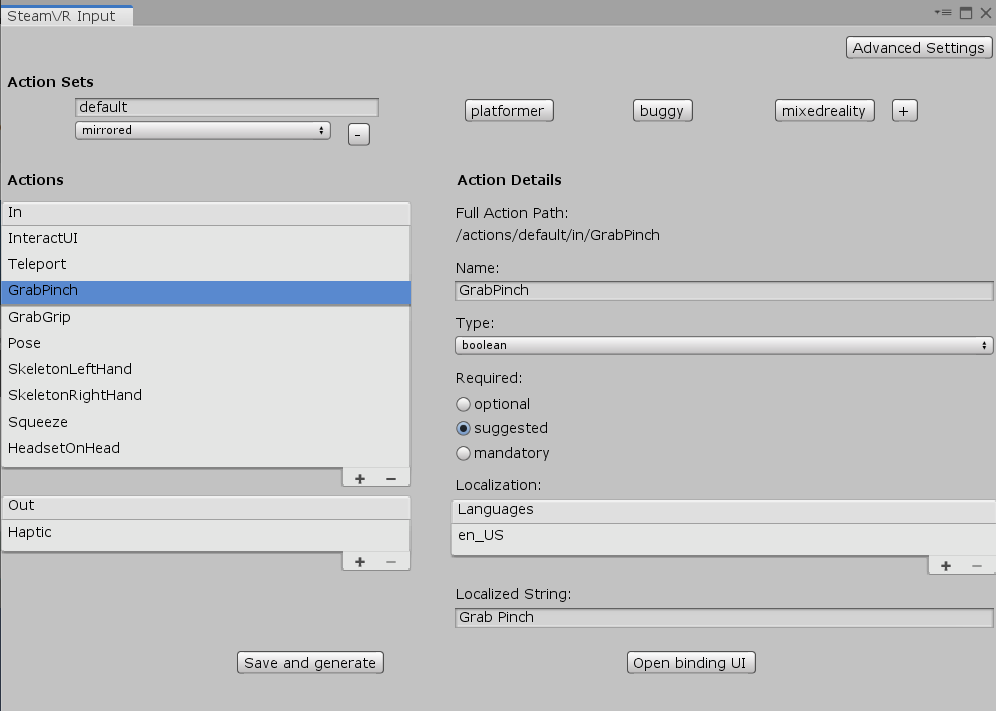

45. In SteamVR 2.0, you need to define Actions for the headset and controllers. Go to Window > SteamVR Input and you'll see something like this.

46. Now we will implement our own function for grabbing objects with the controllers. Under the default Action Set, select the GrabGrip action and rename it to Grab. Then, click Save and Generate.

You will notice that there will be a new SteamVR_Input folder under Assets that includes all the scripts you need.

47. Here is a diagram so you can familiarize yourself with the Vive controller inputs.

In this tutorial, we will be using the Grip button to grab objects.

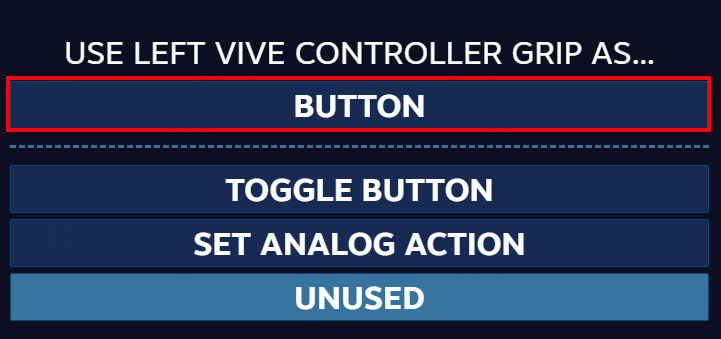

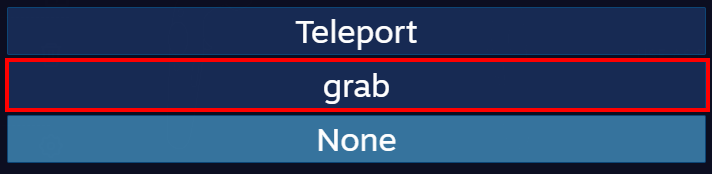

48. Now, we need to bind the action to the hardware. Make sure that SteamVR is open and click Open binding UI in the SteamVR Input window. A web page will open on the default browser. Under Current Controller, make sure that Vive Controller is selected. Then, below Current Binding, click on Edit. You will notice that there are already some default bindings. However, for the sake of the tutorial, delete all these inputs. Next, click the plus sign next to Grip. Select BUTTON in the window that pops up.

Another window will pop up with a list of possible actions. This time, select grab.

Click Apply on the bottom left to save the action set. Since the bindings are mirrored, this will apply to the right hand grip button as well. After that, click on Replace Default Binding to overwrite the default bindings.

49. Before using the grab action, we first need to link the controllers in virtual space to the physical controllers. Select Controller (left) under [CameraRig], then change the Pose Action to \actions\default\in\SkeletonLeftHand. Do the same for Controller (right), but select \actions\default\in\SkeletonRightHand.

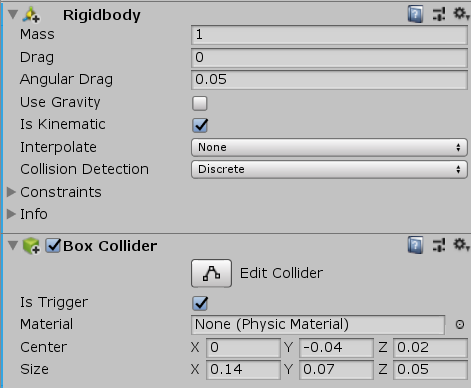

50. We will use collision triggers to hold onto objects. For that, the controllers and objects to be grabbed need rigidbodies and colliders. The balls in the scene already have them, so we can leave them alone. Add Rigidbody to both the left and right controllers. Check Is Kinematic and uncheck Use Gravity for both rigidbodies.

Next, add a Box Collider to both controllers and check Is Trigger. Set the center of the Box Collider to (0, -0.04, 0.02) and the size to (0.14, 0.07, 0.05). If you run the game and look at the controllers in the scene, you will notice that the box colliders are on the top of the controllers.

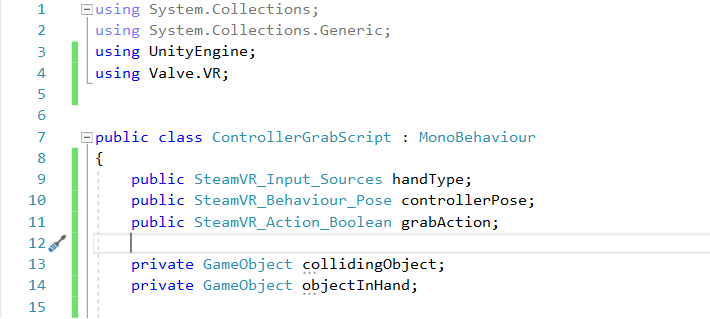

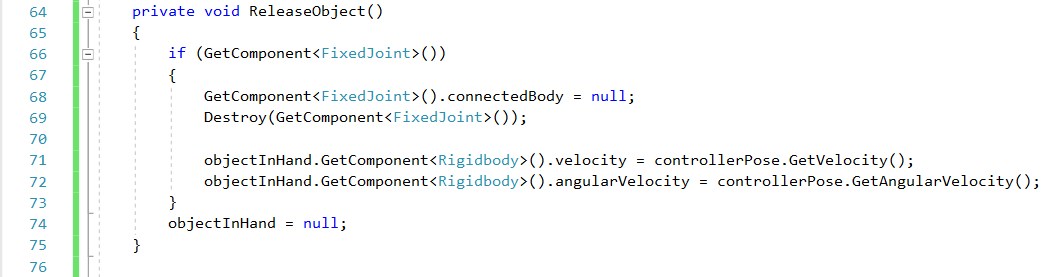

51. Create a script and name it "ControllerGrabScript" and make the following declarations:

The handType, controllerPose and grabAction store references to the hand type and actions, while collidingObject stores the GameObject the trigger is colliding with, and objectInHand serves as a reference to the object currently being grabbed.

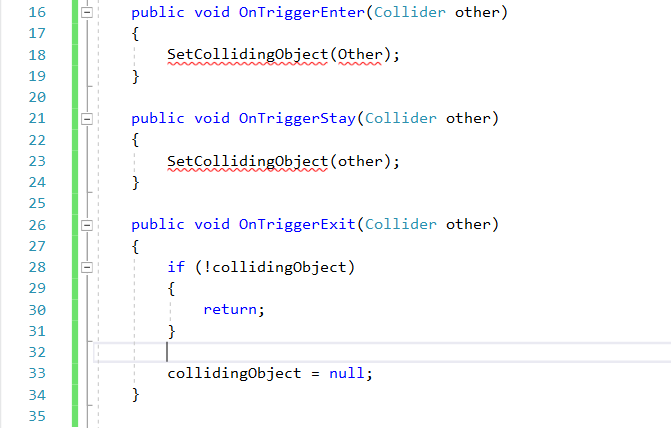

52. Next, add these trigger methods.

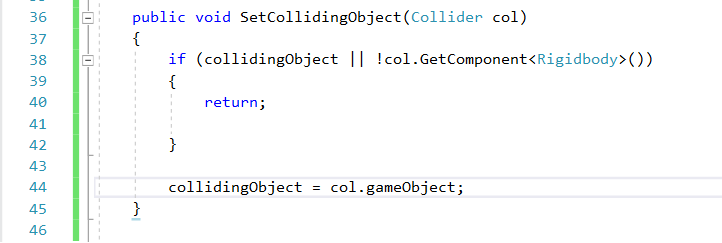

53. Then, we need to define SetCollidingObject.

As you can probably tell, this method stores the other collider's GameObject in collidingObject, unless a collidingObject is already set or the other collider does not have a rigidbody.

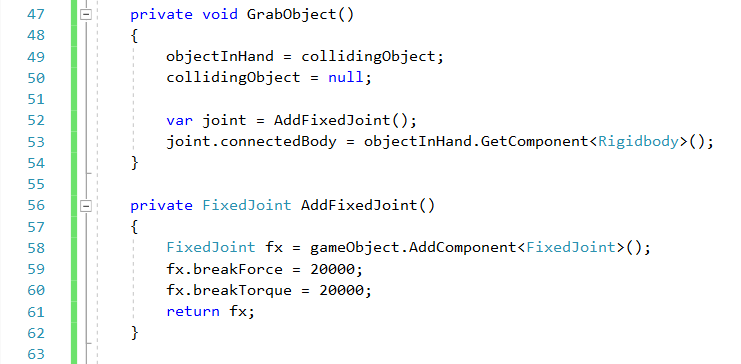

54. Now, we will add methods to grab and release objects.

Here, the FixedJoint connects the object to the controller.

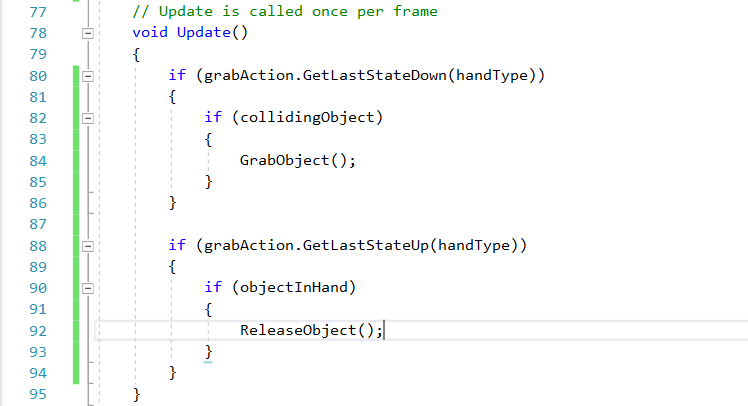

55. Finally, we just need to handle the controller input in Update.
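For reference, steps 51 to 55 can be assembled into a single script along the lines of the sketch below. It uses SteamVR 2.x types (`SteamVR_Input_Sources`, `SteamVR_Behaviour_Pose`, `SteamVR_Action_Boolean`); the break force values and the choice to pass the controller's velocity to the released object (so it can be thrown) are assumptions of this sketch, and the code in the screenshots may differ.

```csharp
using UnityEngine;
using Valve.VR;

// Sketch of a grab script for one Vive controller, using a FixedJoint to hold objects.
public class ControllerGrabScript : MonoBehaviour
{
    public SteamVR_Input_Sources handType;        // Left Hand or Right Hand
    public SteamVR_Behaviour_Pose controllerPose; // pose component of this controller
    public SteamVR_Action_Boolean grabAction;     // the Grab action defined earlier

    private GameObject collidingObject; // object currently touching the trigger collider
    private GameObject objectInHand;    // object currently being held

    private void SetCollidingObject(Collider col)
    {
        // Ignore the collider if something is already stored or it has no rigidbody.
        if (collidingObject != null || col.GetComponent<Rigidbody>() == null) return;
        collidingObject = col.gameObject;
    }

    private void OnTriggerEnter(Collider other) { SetCollidingObject(other); }

    private void OnTriggerStay(Collider other) { SetCollidingObject(other); }

    private void OnTriggerExit(Collider other) { collidingObject = null; }

    private void GrabObject()
    {
        objectInHand = collidingObject;
        collidingObject = null;

        // The FixedJoint connects the object to the controller.
        FixedJoint joint = gameObject.AddComponent<FixedJoint>();
        joint.breakForce = 20000f;  // assumed: high enough not to break in normal use
        joint.breakTorque = 20000f;
        joint.connectedBody = objectInHand.GetComponent<Rigidbody>();
    }

    private void ReleaseObject()
    {
        FixedJoint joint = GetComponent<FixedJoint>();
        if (joint != null)
        {
            joint.connectedBody = null;
            Destroy(joint);

            // Hand the controller's motion to the object so it can be thrown.
            Rigidbody body = objectInHand.GetComponent<Rigidbody>();
            body.velocity = controllerPose.GetVelocity();
            body.angularVelocity = controllerPose.GetAngularVelocity();
        }
        objectInHand = null;
    }

    private void Update()
    {
        // Grab when the action is first pressed while touching an object.
        if (grabAction.GetStateDown(handType) && collidingObject != null)
        {
            GrabObject();
        }

        // Release when the action is let go while holding an object.
        if (grabAction.GetStateUp(handType) && objectInHand != null)
        {
            ReleaseObject();
        }
    }
}
```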

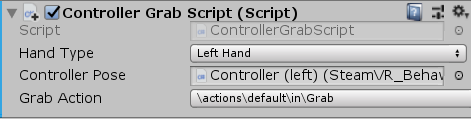

56. Now, go back to the editor and add ControllerGrabScript to each controller. There are some public fields we have to set. For the left controller, set Hand Type to Left Hand, Grab Action to \actions\default\in\Grab. Then, drag the Left Controller to Controller Pose. Repeat this for the Right Controller, but use the Right Hand.

57. Run the game and test it out with the HTC Vive headset and controllers. You should be able to pick up and throw the cubes and balls in the scene.

Useful links

You are encouraged to learn many different VR techniques outside this tutorial, such as teleportation and other forms of movement. Here are some useful links:

Assignment 2

Your Interactive Experience

Assignment 2 is to build a VR game that covers what we have learned so far.

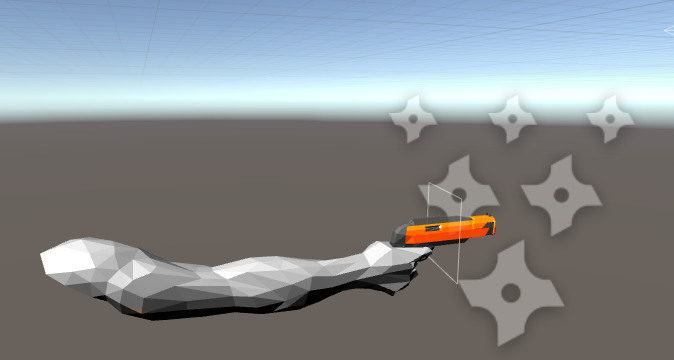

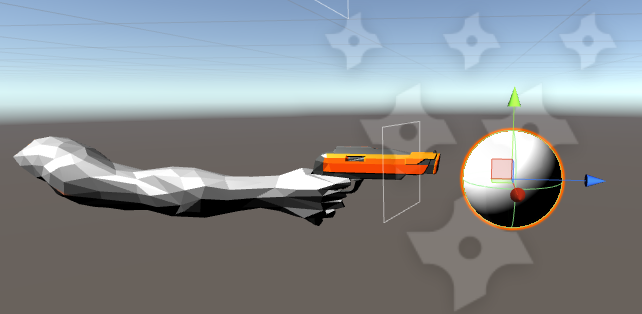

In this assignment, you need to build a shooting game. A weapon object is provided in the package VRBox. You should use this object to build the shooting game.

The game should fulfill the following requirements:

- 1. The game should use Touch Controller input instead of mouse input.

- 2. Your player is able to move in your scene with “portal”.

- 3. Your gun is able to pick up a ball object as the bullet. It should be like this.

- 4. There should be some targets in your scene, which are able to interact with the bullet. These targets can be static or moving.

- 5. As players fire the gun, the ball should fly out towards the target following some laws of physics. (Use Rigidbody and AddForce)

- 6. When your ball hits a target, there should be some response from the target, such as disappearance or fragmentation.

- 7. Also, as the targets are hit, there should be a score recorder to record the score. Implement a UI object to show the score.

- 8. Add some audio into this game to improve the sense of reality and immersion. You should at least have sound clips for picking up the ball, shooting, hitting target (target response). It is perfectly fine if you can build a scene with complex elements from asset store.

Your game should support VR and be comfortable to view in VR mode. Remember to set a good position or script for your camera!

Be creative and pay attention to VR design guidelines with respect to the following topics:

Positioning of objects with respect to the properties of fovea area, maximum viewing angle (lecture 2), space perception (lecture 3), depth cues/multiple depth cues (lecture 3) and perception consistency (lecture 3), sequencing and timing of events (lecture 3, self-study). [Use of audio is optional. Refer to this website if you like to play an audio clip in the scene.]

Grading Rubrics

The evaluation criteria for this miniProject (Assignment 2) are given below:

| Breakdown / Grading | Great | Okay | Bad |
|---|---|---|---|
| Controls (10%) | Project uses Touch Controller. | - | Project uses mouse input / no input. |
| Movements (10%) | Player is able to move in scene with “portal”. | Player is able to move in scene. | Player is unable to move in scene. |
| Gameplay – bullets (25%) | Interactable bullets that follow the laws of physics when fired. | Interactable bullets that follow the laws of physics when fired, but with some errors. | Project does not have interactable bullets. |
| Gameplay – targets (25%) | Contains both static and moving targets that provide excellent responses when hit. | Contains either static or moving targets that provide responses when hit. | Project does not have any targets. |
| UI (10%) | Score recorder UI works perfectly and is placed appropriately. | Score recorder UI is implemented with a few bugs and is out of place. | Project does not have a score recorder UI. |
| Audio (10%) | Audio enhances the experience and immersion. | Audio meets the minimum requirement with sound clips for picking up the ball, shooting and hitting the target. | Project does not have any audio. |
| Creativity (10%) | Unique and engaging experience with refreshing ideas. | The student has put in adequate effort for the project. | The student clearly did not put in effort for the project. |

Should you need any further clarification, feel free to contact the TA marking this miniProject.

Submission Guidelines

After completing your project, select all your objects in the Project panel and click Assets > Export Package to export everything you used in your project.

You should share the hardware for this assignment. You can work together in the lab and help each other (within the group), but each of you should implement a unique VR scene with your own ideas and objects.

What to submit:

XXX.unitypackage (Your game package)

InputManager.asset (Found in ProjectSettings folder)

README.txt (You need to write how to play your game and what downloaded packages or objects you have used in your project)

Zip these files into Your_Student_Number.zip and upload it to the LumiNUS > Files > Assignment 2 Submission folder. The deadline, originally 21-Feb-2020, has been extended to 26-Feb-2020, 11:55pm.